
I am a first-year PhD student at the Robotics Institute, Carnegie Mellon University, advised by Prof. Jeff Schneider. Before that, I completed my undergraduate studies in Automation at Tsinghua University, where I had the privilege of working with Prof. Jun Zhu. I am always open to new research collaborations; please feel free to reach out if our interests align.

Email / Google Scholar / GitHub


My research focuses on LLM agents, deep reinforcement learning, and their applications in decision-making and robotics.


Wen-Tse Chen, Jiayu Chen, Hao Zhu, Jeff Schneider. Proposed a multi-turn reinforcement learning framework for long-horizon LLM agent tasks with highly variable episode lengths.


Wen-Tse Chen, Jiayu Chen, Fahim Tajwar, Hao Zhu, Xintong Duan, Russ Salakhutdinov, Jeff Schneider. NeurIPS 2025. Presented a sample-efficient method for online fine-tuning of LLM agents that uses in-context learning to convert sparse feedback into dense signals, enabling LLMs to adapt to dynamic environments with minimal data.


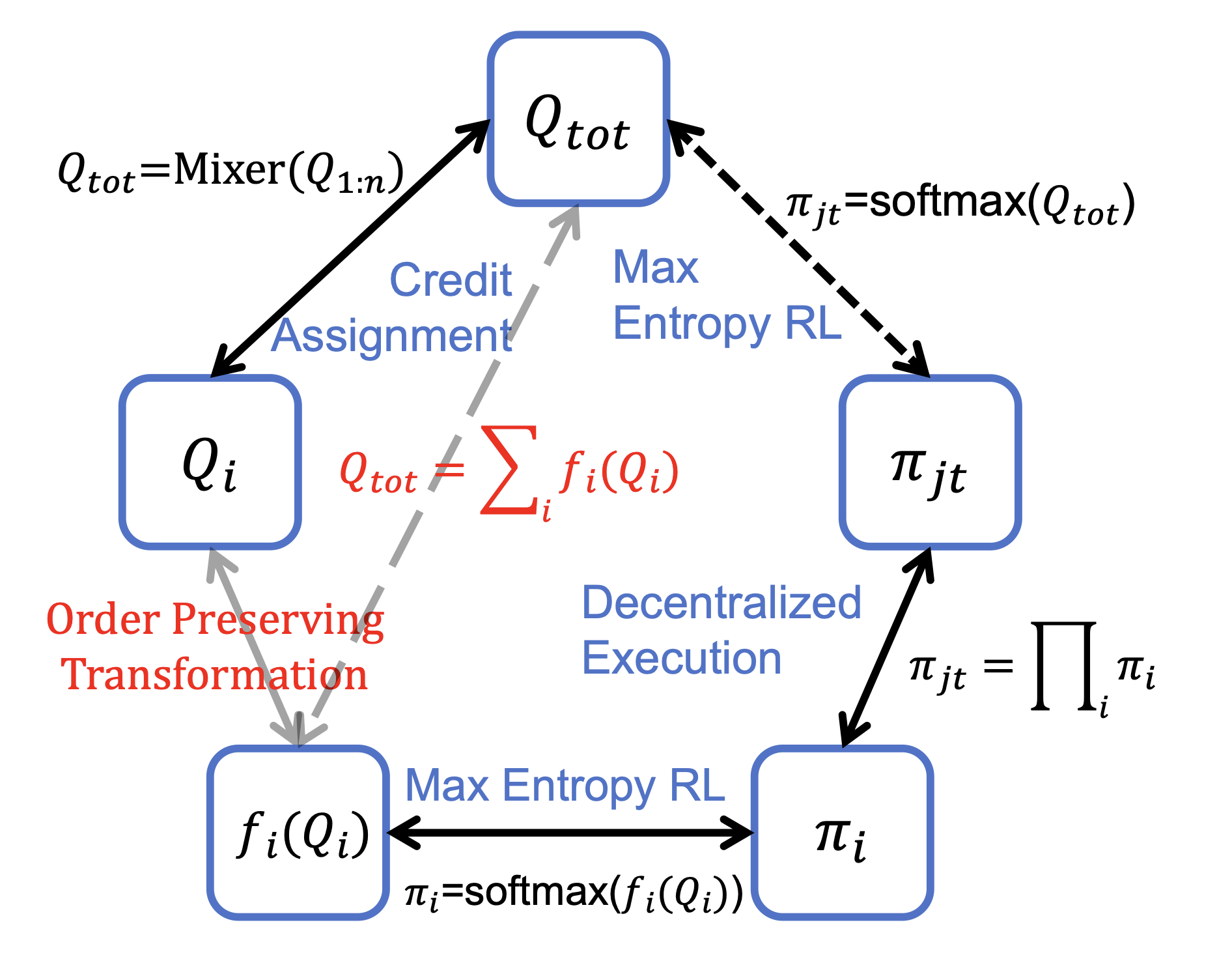

Wen-Tse Chen, Yuxuan Li, Shiyu Huang, Jiayu Chen, Jeff Schneider. AAMAS 2026. Enhanced IGM-based algorithms with maximum-entropy RL for better exploration, using an order-preserving transformation to ensure that locally optimal actions match the global optimum, and achieved SOTA performance on the SMAC-v2 and Overcooked benchmarks.


Wen-Tse Chen, Shiyu Huang, Yuan Chiang, Tim Pearce, Wei-Wei Tu, Ting Chen, Jun Zhu. AAAI 2024. Proposed an on-policy framework that discovers multiple diverse optimal strategies for the same task in a single training run.


Last updated: Feb 2026


Website template copied from Dr. Jon Barron's page.